A Just Culture – The Foundation of Any Successful Safety Management System

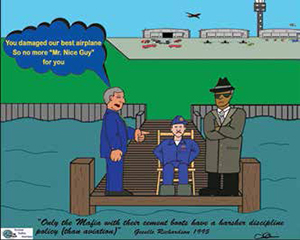

Every industry has its own culture and aviation is no different. Our industry is known for being unforgiving of human error, especially when the error involves damage to an aircraft. Giselle Richardson, a close psychologist friend of mine, was the keynote speaker at our first conference on maintenance errors and their prevention in 1995. One thing she said that has stuck in my mind to this day was: “Only the mafia with their cement boots has a harsher discipline policy (than aviation)”. Thinking back on my many years in aviation, she was right. I can recall going to work one day and asking where Joe was. It turned out he had been fired on my days off and not one person knew why. It was later rumored that he had left his flashlight up behind the instrument panel, and that it had later fallen out onto the captain’s foot during pushback from the gate, resulting in a delay.

Was it cement boots for Joe? Let’s look at some of the cultures that can be found in our industry.

Any employee who through their carelessness causes an accident, injury or damage in excess of $1,000 will be terminated

The era of the “Blame” Culture.

This culture has been common throughout history.

It is based on the “eye for an eye” way of thinking.

It is seen to be doing something about the problem.

It seems to “solve” the problem, as the instigator of the problem is no longer there to do it again.

It does not determine or solve the root causes of the problem.

It causes people to try to hide their errors.

It creates a lose-lose situation and will doom an SMS to failure.

It depends upon the Circumstances

The era of the “It Depends” Culture

No one knows what will happen when an error is made.

It could depend on the mood of the boss that day.

It likely will depend on how expensive the error is and how much media attention it gets.

It fails to determine the root causes and results in an “it wasn’t me” culture, much like the blame culture.

It is a lose-lose situation and will also doom an SMS to failure.

Regardless of your action, if you report your error and assist in its prevention, no disciplinary action will be taken

The era of the “No Blame” culture

This enables an organization to learn from the error and to find its root causes.

It lessens the chances of a repeat error being made.

The data helps find trends and system causes.

However, it interferes with one’s sense of justice — i.e., that feeling that he/she “just got away with murder.” (Coming to work drunk and driving the service truck into the side of an aircraft is OK?)

It makes no provision for reckless behavior.

A form of this just culture is a must for a successful SMS.

All errors must be reported and will be treated as “Learning Outcomes” except in cases of Reckless Error

What is a Just Culture?

It is where everyone feels that the guilty party was treated fairly and justly after they made a human error or reported a near miss.

It clearly spells out that all errors will be treated as learning outcomes and — except for cases of reckless behavior — no discipline will be administered.

It is a win-win situation and is the foundation of any successful SMS.

What is an Administrative Policy?

An administrative policy is one that spells out exactly how an error or reported near miss will be handled. It will inform all exactly where that line in the sand is when it comes to discipline. The administration of discipline will become a small part of the overall policy.

The policy is based on the following suppositions:

a) Errors are not made on purpose (if they were, it would be sabotage);

b) The person making the error is the least likely to ever make it again;

c) Disciplining a person usually does nothing positive to reduce a repeat of the error if it was not intentional;

d) The policy will become the heart of your SMS.

It has been determined that discipline will not be required to help prevent a repeat of the error 95 to 97 percent of the time.

Based on work done by David Marx, the father of just culture, the following are the three types of error that must be dealt with.

1. Normal Error - No Culpability = Learning Outcome (Console)

Normal errors are the result of being human and/or the system in which the person works. It is the unintentional forgetting to do something or doing something wrong while thinking it was right. There is no intention to make the error. You might later think to yourself, “How could I have been so stupid?” Maybe you forget to replace the oil cap after checking the oil level, or install a component wrong because you have never had the training (system error) and the manual is ambiguous but it seems right.

The end result is a learning outcome in which the knowledge gained is used to devise ways to prevent it from recurring to anyone.

About 80 percent of all errors will fall into this category.

2. At-Risk Error - No culpability (this time) = Learning Outcome (Coach)

At-risk errors are the result of the person knowing what he/she is doing is wrong but seeing no bad outcomes and often positive rewards for the action. The key is that the person fails to see or realize the risk in what they are doing. Norms (the way we do things around here) often result in an at-risk error.

Remember last month’s Mickey Mouse and Donald Duck “pencil whipping” for the tire pressures on the DC-8s? That was a norm that ultimately resulted in the loss of 261 lives. Because the people were not aware of the risk in what they were doing, they would receive one “get out of jail free” card and be coached to realize the danger or risk. Should the person repeat the error once they realize the risk, the error falls into the reckless error category.

The classic norm that was at risk has to be American Airlines DC-10 Flight 191 out of Chicago that lost the No. 1 engine on takeoff. Two hundred and seventy-three people died as a result of maintenance using a forklift to remove and reinstall the wing-mounted engines with the pylon attached, instead of separating the engine from the pylon before removing the pylon. This was an at-risk error as what they were doing was a violation. They failed to see the risk but had the positive reward of saving 22 man-hours per engine. To my knowledge, nobody was ever charged (disciplined) for this at-risk error.

3. Reckless Error - Culpable = Learning Outcome (Discipline)

Reckless error results from an action that the person knows has a significant and unjustifiable risk but they choose to do it anyway, consciously disregarding the possible consequences. The best example of this is the drunk who chooses to drive. The person knows the risk but chooses to do it anyway. An aviation example would be the maintenance person who realizes he forgot his flashlight up behind the instrument panel and decides to say nothing. There can still be a learning outcome as to why the person chose to take that risk but discipline might be required to ensure that it does not happen again.

How do you tell the difference?

You will need to answer the following questions.

1. Was the act deliberate with a reasonable knowledge of the consequences? Yes = reckless

2. Has the person made similar errors in the past? Yes = reckless

3. Do they accept responsibility for their actions? Yes = likely at risk

4. Has the person learned from the experience? Yes = likely at risk

5. Are they likely to do it again? Yes = reckless
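For readers who like to see a checklist written out, the five questions above can be sketched as a small Python helper. This is only an illustration: the names `Answers`, `indications` and `summarize` are hypothetical, and the rule for combining the answers (any "reckless" reading outweighs the "likely at risk" readings) is an assumption made for the sketch, not something the questions themselves spell out. A real review would rest on judgment, not a tally.

```python
from dataclasses import dataclass


@dataclass
class Answers:
    """Yes/no answers to the five questions, in order (hypothetical names)."""
    deliberate_with_known_consequences: bool  # question 1
    similar_errors_before: bool               # question 2
    accepts_responsibility: bool              # question 3
    learned_from_experience: bool             # question 4
    likely_to_repeat: bool                    # question 5


def indications(a: Answers) -> list:
    """Return the reading attached to each 'yes' answer, in question order."""
    readings = []
    if a.deliberate_with_known_consequences:
        readings.append("reckless")
    if a.similar_errors_before:
        readings.append("reckless")
    if a.accepts_responsibility:
        readings.append("likely at risk")
    if a.learned_from_experience:
        readings.append("likely at risk")
    if a.likely_to_repeat:
        readings.append("reckless")
    return readings


def summarize(a: Answers) -> str:
    """Combine the readings. ASSUMPTION: any 'reckless' reading takes priority."""
    readings = indications(a)
    if "reckless" in readings:
        return "reckless"
    if "likely at risk" in readings:
        return "likely at risk"
    return "undetermined"


if __name__ == "__main__":
    # Someone who accepts responsibility and has learned from the experience.
    print(summarize(Answers(False, False, True, True, False)))
```

Returning the individual readings separately from the summary keeps the reasoning visible, so the person reviewing the case can see which answers drove the result.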

The only purpose of discipline has to be to ensure that it does not happen again.

There will be those who will have trouble agreeing with this culture change and will hide behind the “he/she must be held accountable” adage. These are the remnants of the blame (cement boot) culture. This is especially true of the at-risk error, wherein the act was done knowingly but the possible consequences were not recognized.

At-risk errors account for only about 15 percent of all errors, leaving reckless errors, the ones that call for discipline, at only five percent. Who would have ever thought it would be that low? In a truly just culture it will be and the cement boots will be a thing of the past.

In the next article I’ll start discussing the “Dirty Dozen,” starting with No. 12, norms, as it ties in with my last few articles.

Please send any comments you wish, good, bad or even downright ugly, as I am open to discussing any human error topic that you feel is important.

Gordon Dupont worked as a special programs coordinator for Transport Canada from March 1993 to August 1999. He was responsible for coordinating with the aviation industry in the development of programs that would serve to reduce maintenance error. He assisted in the development of Human Performance in Maintenance (HPIM) Parts 1 and 2. The “Dirty Dozen” maintenance safety posters were an outcome of HPIM Part 1.

Prior to working for Transport, Dupont worked for seven years as a technical investigator for the Canadian Aviation Safety Board (later to become the Canadian Transportation Safety Board). He saw firsthand the tragic results of maintenance and human error.

Dupont has been an aircraft maintenance engineer and commercial pilot in Canada, the United States and Australia. He is the past president and founding member of the Pacific Aircraft Maintenance Engineers Association. He is a founding member and a board member of the Maintenance And Ramp Safety Society (MARSS).

Dupont, who is often called “The Father of the Dirty Dozen,” has provided human factors training around the world. He retired from Transport Canada in 1999 and is now a private consultant. He is interested in any work that will serve to make our industry safer. Visit www.system-safety.com for more information.